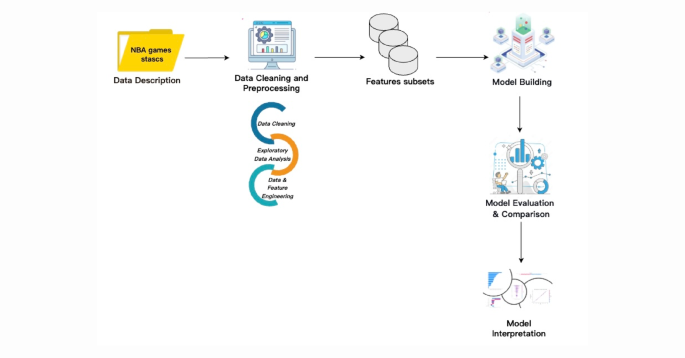

This study develops a predictive framework for NBA game outcomes using a machine-learning approach based on the Stacking ensemble method. This section describes the materials and methods used. Figure 1 illustrates the overall workflow of the proposed framework. All experiments were run in Python in a Jupyter Notebook environment, with libraries such as NumPy, Pandas, Scikit-learn, and Matplotlib used for data handling, model building, and visualization of results.

Dataset

The datasets for this study were sourced from the official NBA website (https://www.nba.com), which provides multi-dimensional data including player and team statistics, advanced efficiency metrics, spatial tracking information, lineup performance, and salary details. These data are widely used in tactical analyses and machine learning applications.

This study uses game-level statistics and results from the 2021–2022, 2022–2023, and 2023–2024 regular seasons. Each season comprises 1,230 games, giving 3,690 games in total and 7,380 samples, because every game yields one record for the home team and one for the away team. The dataset includes 20 feature variables, such as total field goals and three-point shots, with the game outcome (win or loss) as the target variable. A detailed overview of the variables is given in Table 2.

Data Processing

To frame the task as binary classification, the outcome of every regular-season game was encoded as a binary target variable, with a win coded as 1 and a loss as 0. This encoding gives the classifiers a numeric target on which they can be trained directly.

The dataset is balanced: every game contributes one winning and one losing record, so the 7,380 samples contain 3,690 observations for each outcome (win and loss), as shown in the summary statistics in Table 3.
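The encoding and the balance check described above can be sketched in a few lines of Pandas. This is a minimal illustration only: the toy rows and the column names `TEAM`, `WL`, `FGM`, and `WIN` are assumptions, not the schema used in the study.

```python
import pandas as pd

# Hypothetical game-level rows; the values and column names are assumptions
# made for illustration and are not taken from the study's dataset.
games = pd.DataFrame({
    "TEAM": ["BOS", "NYK", "LAL", "DEN"],
    "WL": ["W", "L", "L", "W"],
    "FGM": [43, 39, 41, 45],
})

# Encode the outcome as a binary target: win -> 1, loss -> 0.
games["WIN"] = games["WL"].map({"W": 1, "L": 0})

# Every game yields one winner and one loser, so the two classes
# should have equal counts.
class_counts = games["WIN"].value_counts().to_dict()
print(class_counts)
```

On the full dataset, the same check would be expected to report 3,690 samples per class.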

Dataset Partitioning

To detect overfitting and obtain a reliable estimate of predictive accuracy on unseen data, the dataset was randomly divided into training and testing subsets. Random sampling was used for this division because regular-season games were treated as mutually independent.

Specifically, 80% of the data was assigned to the training set and the remaining 20% to the testing set. The training set was used to fit the models, and the testing set was held out to assess their performance, as detailed in Table 4.
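A random 80/20 split of this kind can be sketched with Scikit-learn's `train_test_split`. The feature matrix below is synthetic and only matches the dataset's dimensions (7,380 samples, 20 features); the `random_state` value is an assumption added for reproducibility and is not reported in the text.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic placeholder data with the same shape as the study's dataset.
rng = np.random.default_rng(0)
X = rng.normal(size=(7380, 20))   # 20 feature variables per sample
y = np.repeat([0, 1], 3690)       # balanced win/loss labels

# Random 80/20 split; random_state=42 is an assumed seed.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, random_state=42
)

print(X_train.shape, X_test.shape)
```

Passing `stratify=y` would additionally keep the win/loss ratio identical in both subsets; whether the study did so is not stated.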

Feature Selection

Feature selection strongly affects a model's accuracy and generalizability. Because multicollinearity among variables can hinder model interpretability, the candidate features were screened for it. As part of the exploratory data analysis (EDA), Pearson correlation coefficients were computed between all pairs of features and visualized as a correlation heatmap (Fig. 2). No pronounced multicollinearity was found, so all 20 features were retained for training and analysis.
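A pairwise Pearson screening of this kind might look like the sketch below. The data are synthetic, the column names are hypothetical, and the 0.8 cut-off is an assumed rule of thumb; the text does not state which threshold, if any, was applied. A deliberately redundant column (`PTS_PROXY`) is included so that the check has something to flag.

```python
import numpy as np
import pandas as pd

# Synthetic features; names are hypothetical stand-ins for box-score columns.
rng = np.random.default_rng(0)
features = pd.DataFrame(
    rng.normal(size=(200, 4)), columns=["FGM", "FG3M", "REB", "AST"]
)
# A nearly redundant column, added only to demonstrate the check.
features["PTS_PROXY"] = 2 * features["FGM"] + 0.1 * rng.normal(size=200)

# Pearson correlation matrix between all feature pairs.
corr = features.corr(method="pearson")

# Keep the strict upper triangle so each pair is listed once.
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))

threshold = 0.8  # assumed cut-off for "pronounced" correlation
pairs = upper.stack()
high_pairs = pairs[pairs.abs() > threshold]
print(high_pairs)
```

The matrix `corr` can also be passed to a plotting routine such as Matplotlib's `imshow` to produce a heatmap.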

Model Building

After data preprocessing, the machine learning models were trained with Scikit-learn. The initial division into training and testing sets allowed for systematic performance evaluation. Several classifiers were trained and evaluated so that their predictive performance could be compared.

Baseline models were evaluated with 5-fold cross-validation, a standard method for obtaining robust performance estimates. The model achieving the highest average accuracy across the folds was selected for the base layer of the Stacking ensemble framework, with the accuracy metric defined formally in Eq. 1.
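The selection procedure can be sketched as follows. This is an illustration under stated assumptions rather than the study's configuration: the data are synthetic, the three candidate classifiers and their settings are chosen arbitrarily, the two best candidates (rather than one) are kept as base learners so that the ensemble is non-trivial, and logistic regression is assumed as the meta-learner.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the training set (20 features, binary target).
X, y = make_classification(
    n_samples=400, n_features=20, n_informative=8, random_state=0
)

# Candidate baseline classifiers (an assumed, illustrative selection).
candidates = {
    "logreg": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "knn": make_pipeline(StandardScaler(), KNeighborsClassifier()),
    "rf": RandomForestClassifier(n_estimators=50, random_state=0),
}

# Mean accuracy over 5 cross-validation folds for each candidate.
mean_acc = {
    name: cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()
    for name, model in candidates.items()
}

# Rank candidates by mean accuracy and keep the top two as base learners.
ranked = sorted(mean_acc, key=mean_acc.get, reverse=True)
stack = StackingClassifier(
    estimators=[(name, candidates[name]) for name in ranked[:2]],
    final_estimator=LogisticRegression(max_iter=1000),  # assumed meta-learner
    cv=5,
)
stack.fit(X, y)
print(mean_acc, ranked[:2])
```

`StackingClassifier` fits the meta-learner on out-of-fold predictions from the base learners, which limits leakage from the base layer into the final estimator.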