A Voice Without Sound

Our inner voice is a private space for our thoughts. But what happens when machines begin to pick up this inner dialogue? A study by Stanford University researchers, conducted as part of the BrainGate2 clinical trial, has decoded “inner speech” (silent self-talk) from electrical signals in the brain.

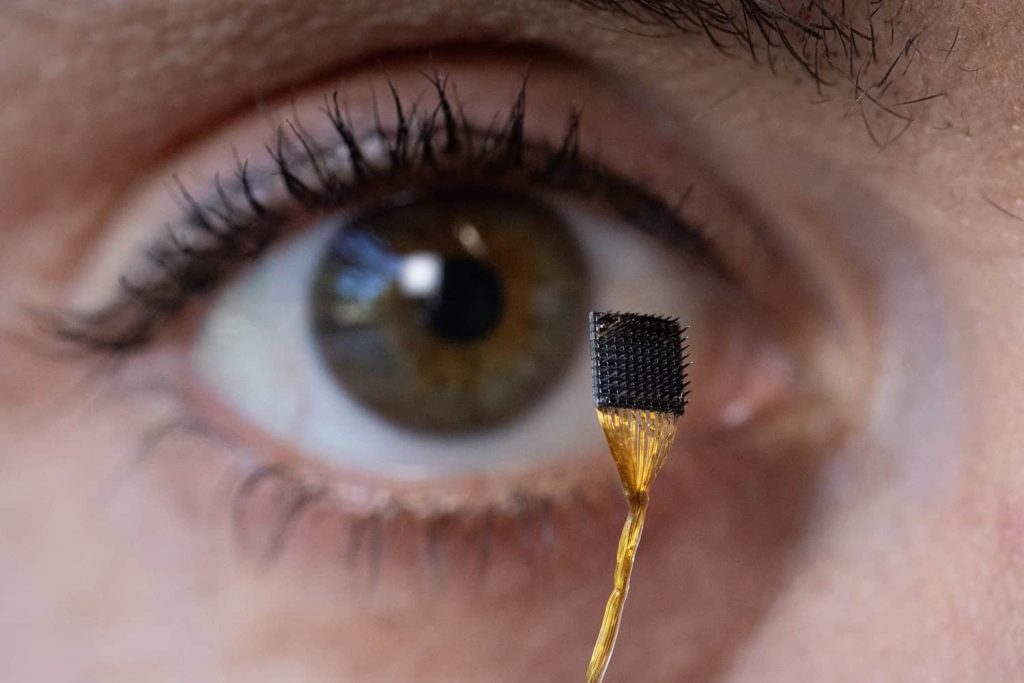

Brain-computer interfaces (BCIs) hold great promise for people with paralysis or advanced ALS. BCIs have already enabled patients to control robotic limbs or drones through thought alone. Existing communication BCIs, however, require users to attempt to speak; the attempt generates electrical signals in the motor cortex that AI models then translate into words.

Attempting to speak can be exhausting for these users. Erin Kunz, the study’s lead author, noted that decoding inner speech could make communication simpler and less tiring. The team set out to decode the brain signals produced when a person silently imagines words without any attempt at movement, and they succeeded.

Decoding Inner Speech

In tests with four participants who had ALS or had suffered brainstem strokes, microelectrodes implanted in the motor cortex recorded distinct firing patterns when the participants imagined phrases such as “I don’t know how long you’ve been here.” The team used AI models trained to detect and interpret these neural patterns, achieving real-time decoding from a vocabulary of 125,000 words with accuracy exceeding 70%. Before receiving the implant, one participant could communicate only through eye movements.

Kunz said it was exciting to see, for the first time, what brain activity looks like during silent speech. The breakthrough also raises ethical concerns, because such a system could capture thoughts the user never meant to share.

Ethical Concerns and Safeguards

The researchers found that the BCI sometimes detected inner speech the participants had not intended to communicate. For instance, when participants silently counted shapes, the device picked up the numbers they were thinking. Ethicist Nita Farahany cautioned that the boundary between private and public thought may be blurrier than assumed. To reduce the risk, the Stanford team built in two safeguards: the decoder was trained to ignore inner speech unless prompted, and the system activated only after the user imagined a specific “unlock” phrase, which it recognized with nearly 99% accuracy.
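
The unlock-phrase safeguard can be pictured as a simple gate placed in front of the decoder's output. The Python sketch below is an illustration only, not the Stanford team's implementation. It assumes the decoder already emits text, whereas the study's system works on neural signals, and the class name `GatedDecoder`, its `feed` method, and the phrase "open sesame" are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so matching ignores case and spacing."""
    return " ".join(text.lower().split())


@dataclass
class GatedDecoder:
    """Hypothetical gate that withholds decoded text until an unlock phrase appears."""

    unlock_phrase: str  # placeholder phrase, not the one used in the study
    unlocked: bool = False
    released: List[str] = field(default_factory=list)

    def feed(self, decoded_text: str) -> Optional[str]:
        """Accept one decoded utterance; return it only if the gate is open."""
        if not self.unlocked:
            # While locked, nothing is released, not even the unlock phrase itself.
            if _normalize(decoded_text) == _normalize(self.unlock_phrase):
                self.unlocked = True
            return None
        self.released.append(decoded_text)
        return decoded_text


gate = GatedDecoder(unlock_phrase="open sesame")
outputs = [gate.feed(t) for t in ["one two three", "Open  Sesame", "I am thirsty"]]
print(outputs)  # [None, None, 'I am thirsty']
```

In this sketch the silently counted "one two three" is discarded because it arrives before the unlock phrase. A practical system would presumably also need a way to lock again, which is left out here.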

The Future of Brain Transparency

For now, these brain implants are used only in clinical settings under FDA supervision. Farahany warned that consumer BCIs, such as wearable devices, could eventually offer similar decoding capabilities without adequate protections. In the wrong hands, such technology could severely threaten mental privacy.

The technology is not yet capable of straightforward mind reading, and researchers note that it remains limited in decoding spontaneous thought. Cognitive neuroscientist Evelina Fedorenko remarked that much of human thought doesn’t translate cleanly into language.

Looking Ahead

The findings underscore the close link between thinking and speaking: the motor cortex encodes imagined speech in neural patterns similar to those of attempted speech. As BCIs evolve, so do the risks to mental privacy and of information leakage. Kunz remains optimistic, pointing to the potential for BCIs to restore fluent communication through inner speech.

As we explore this new territory, it becomes crucial to understand and safeguard our mental privacy against unwanted intrusion. The future holds both promise and challenge as we tread into uncharted realms of brain technology.