Overview of Snapshot Optical Image Generation

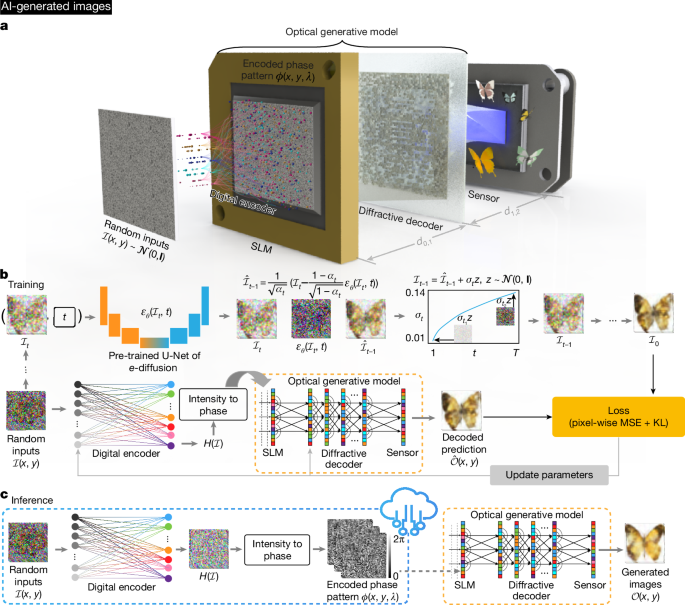

The snapshot optical image generation process has two main components: a digital encoder and an optical generative model. To generate images for the MNIST, Fashion-MNIST, Butterflies-100, and Celeb-A datasets, we used a modified multi-layer perceptron (MLP) architecture with \({L}_{d}\) fully connected layers, each followed by an activation function \(\kappa\). The digital encoder takes an input signal drawn from a standard normal distribution, \({\mathcal{I}}(x,y) \sim {\mathcal{N}}({\bf{0}},\,{\bf{I}})\), and outputs an encoded signal \({{\mathcal{H}}}^{({L}_{d})}\) together with a scaling factor \(s\). The one-dimensional encoder output is reshaped into a two-dimensional signal \({{\mathcal{H}}}^{({L}_{d})}\in {{\mathbb{R}}}^{h\times w}\).
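The encoder described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in this work: the function names `init_encoder` and `encode`, the layer widths, the LeakyReLU slope of 0.2 standing in for \(\kappa\), and the choice to emit the scaling factor \(s\) as one extra output unit are all hypothetical.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    # Stand-in for the activation kappa; the slope value is an assumption.
    return np.where(x > 0, x, slope * x)

def init_encoder(rng, sizes):
    # One (weights, bias) pair per fully connected layer.
    # `sizes` lists hypothetical layer widths, input first.
    return [
        (rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]

def encode(params, noise, h, w):
    # Flatten the noise input, apply each layer followed by the activation,
    # then split the 1D output into the h*w signal and the scalar s.
    x = noise.reshape(-1)
    for weights, bias in params:
        x = leaky_relu(x @ weights + bias)
    signal, s = x[:-1], x[-1]
    return signal.reshape(h, w), s

rng = np.random.default_rng(0)
h = w = 32
# Three layers here; the last one has h*w + 1 units (signal plus s).
params = init_encoder(rng, [h * w, 256, 256, h * w + 1])
noise = rng.standard_normal((h, w))   # I(x, y) ~ N(0, I)
H, s = encode(params, noise, h, w)
```

In this sketch the weights are random, so `H` carries no learned structure; it only shows the data flow from a noise sample to a reshaped two-dimensional signal and a scalar.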

For generating Van Gogh-style artworks, the encoder comprises three components: a noise feature processor, an in silico field propagator, and a complex field converter. Together, these convert the randomly sampled input into a two-dimensional output \({{\mathcal{H}}}^{({L}_{d})}\in {{\mathbb{R}}}^{h\times w}\), which is then passed to the optical generative model.

Optical Generative Model Construction

The optical generative model consists of a spatial light modulator (SLM) and a diffractive decoder made up of \({L}_{o}\) decoding layers. To construct the encoded phase pattern \(\phi (x,y)\) projected by the SLM, the normalized real-valued output \({{\mathcal{H}}}^{({L}_{d})}\) is rescaled to the range \([0,\alpha {\rm{\pi }}]\). The incident optical field is then \({{\mathcal{U}}}^{(0)}(x,y)=\cos (\phi (x,y))+i\sin (\phi (x,y))\), a unit-amplitude field, and its propagation through air is modeled with the angular spectrum method. The output complex field \({\mathcal{O}}(x,y)\) is computed by alternating free-space propagation and phase modulation across the decoding layers.
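The forward model above can be illustrated with a short NumPy sketch. It is not the implementation used in this work: the min-max normalization, the function names, the single random decoder phase mask, and the wavelength, pixel pitch, and propagation distance are hypothetical values chosen only so that the example runs.

```python
import numpy as np

def phase_encode(H, alpha):
    # Min-max normalize H (an assumed normalization), rescale to
    # [0, alpha*pi], and build the field U0 = cos(phi) + i*sin(phi).
    span = H.max() - H.min()
    norm = (H - H.min()) / span if span > 0 else np.zeros_like(H)
    phi = alpha * np.pi * norm
    return phi, np.cos(phi) + 1j * np.sin(phi)

def angular_spectrum(U, wavelength, pitch, distance):
    # Free-space propagation: multiply the field's spatial spectrum by
    # exp(i*kz*distance), discarding evanescent components.
    ny, nx = U.shape
    fx = np.fft.fftfreq(nx, d=pitch)
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(U) * transfer)

def decode(U0, layer_phases, wavelength, pitch, distance):
    # Alternate propagation and phase modulation for each decoding layer,
    # then propagate once more to the output plane.
    U = U0
    for phase in layer_phases:
        U = angular_spectrum(U, wavelength, pitch, distance)
        U = U * np.exp(1j * phase)
    return angular_spectrum(U, wavelength, pitch, distance)

rng = np.random.default_rng(0)
H = rng.standard_normal((32, 32))            # stand-in encoder output
phi, U0 = phase_encode(H, alpha=2.0)
layers = [rng.uniform(0.0, 2.0 * np.pi, size=H.shape)]  # one untrained layer
out = decode(U0, layers, wavelength=520e-9, pitch=8e-6, distance=0.05)
intensity = np.abs(out) ** 2                 # what a sensor would record
```

Because the phase masks and the propagating part of the transfer function both have unit modulus, the total energy of the field is preserved in this sketch, which is a convenient sanity check when experimenting with it.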

Training Strategy for Optical Generative Models

The primary goal of the generative model is to capture the underlying data distribution \({p}_{{\rm{data}}}({\mathcal{I}})\), so that it can produce new data samples that follow \({p}_{{\rm{model}}}({\mathcal{I}})\). For training, we first used a digital generative model based on DDPM to learn the data distribution. With this DDPM serving as the teacher, we then trained the snapshot optical generative model by minimizing the mean square error (MSE) together with the Kullback–Leibler (KL) divergence.
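A combined objective of this kind might look like the sketch below. The exact form of the loss is not given here, so everything specific is an assumption: the KL term treats each image as a discrete distribution over pixels after normalizing it to sum to one, the direction of the divergence is teacher to optical output, and the weight `gamma` and the function names are hypothetical.

```python
import numpy as np

def mse(pred, target):
    # Mean square error between two images of the same shape.
    return np.mean((pred - target) ** 2)

def kl_divergence(p, q, eps=1e-12):
    # KL(p || q) after normalizing both non-negative images to sum to one.
    p = p / (p.sum() + eps)
    q = q / (q.sum() + eps)
    return np.sum(p * np.log((p + eps) / (q + eps)))

def distillation_loss(optical_intensity, teacher_image, gamma=1e-4):
    # Weighted sum of MSE and KL; gamma is an illustrative weight.
    return (mse(optical_intensity, teacher_image)
            + gamma * kl_divergence(teacher_image, optical_intensity))

rng = np.random.default_rng(0)
teacher = rng.random((32, 32))   # stand-in for a teacher-generated image
optical = rng.random((32, 32))   # stand-in for the optical model's output
loss = distillation_loss(optical, teacher)
```

When the two images are identical, both terms vanish, so the loss is zero; any mismatch makes the MSE term positive.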

Implementation and Datasets

MNIST and Fashion-MNIST provide class labels, so for these datasets the input to the first layer of the digital encoder combines the flattened image with an embedding of the class label. For datasets without explicit labels, such as Butterflies-100 and Celeb-A, the input is the flattened image alone. Image resolution is fixed at 32 × 32 for all datasets. The encoder layers use LeakyReLU activations, and the setup targets energy-efficient image generation across several SLM configurations and operating wavelengths.
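The two input variants can be sketched as follows. This is an illustration only: the helper `build_encoder_input`, the embedding dimension of 16, the randomly initialized embedding table, and the use of concatenation to combine image and embedding are assumptions, since the text does not specify them.

```python
import numpy as np

def build_encoder_input(image, label=None, embedding_table=None):
    # Flatten the 32 x 32 input; if a class label is given, append its
    # embedding vector (concatenation is an assumed way of combining them).
    flat = image.reshape(-1)
    if label is None:
        return flat
    return np.concatenate([flat, embedding_table[label]])

rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32))
# Hypothetical table: 10 classes (as in MNIST), 16-dimensional embeddings.
embedding_table = rng.standard_normal((10, 16))

labeled_input = build_encoder_input(image, label=3,
                                    embedding_table=embedding_table)
unlabeled_input = build_encoder_input(image)
```

In a trained model the embedding table would be learned jointly with the encoder weights; here it is random and only demonstrates the input shapes.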

Performance Analysis and Comparison

Our performance analysis showed that snapshot optical generative models can match the image generation capabilities of deeper digital models while using fewer resources. Configurations that lacked diffractive decoders or class embeddings ran into difficulties, which demonstrates the importance of both components for output quality. Tests with varying phase modulation levels also highlighted the models' resilience to random misalignments. Finally, the energy-efficiency analysis of the optical generative frameworks suggests that they are well suited to applications requiring real-time image generation or visually secure communication.